I wrote a couple of years ago that the move to Apple Silicon (and the accompanying lock-down of their hardware ecosystem) was a last straw for me as a Mac user (and long-time fanboy). I still have some Macs, but I haven’t moved beyond Intel, and I won’t:

An Intel Mac Mini provides our home web and media server, Nic’s laptop is an Intel MacBook, and my “big iron” workstation is a heavily upgraded Intel Mac Pro 4,1 (with 5,1 firmware). But none of those are daily drivers for me, so since Apple’s big move, I’ve been on the hunt for alternate hardware.

Admittedly, it’s hard to top Apple’s build quality and industrial design, but the legendary ThinkPad used to provide a real competitor to the PowerBook, and my experiences with that lineup were always positive. Of course, IBM doesn’t make the ThinkPad any more (they sold the brand to Lenovo), but that company has proven a good steward.

I now have 3 ThinkPad devices, all circa 2016-18. Each is nicely designed, with a comfortable keyboard and a rugged exterior. Unlike modern MacBooks, all are eminently repairable: the bottom comes off easily, usually with captive screws, giving ready access to the storage, RAM and, in two out of three cases, the battery. My smallest ThinkPad, an X1 Tablet, is a lot like the Microsoft Surface (itself an entirely un-repairable device), and it took a little more delicacy to replace its battery, but at least a dead battery didn’t render the entire machine garbage.

Aside from the highly portable tablet, my work machine is an X1 Yoga, with a 360-degree hinge that lets you use it as a laptop or a tablet, and my personal dev box is an X1 Carbon. The Carbon makes an excellent Linux machine, with 100% of the hardware supported by Lenovo themselves (save for the fingerprint reader, which took a little effort, but works fine), and all-day battery life.

The X1 line-up does signify a higher-end positioning. I’m sure the consumer-grade devices are less premium, and of course I can’t speak to the software preload if you buy new (and really, you shouldn’t: these are 10+ year machines), but Lenovo’s update software is helpful after a fresh OS install, unobtrusive, and easily removed. Only my oldest X1 is officially out of support; the rest are still getting BIOS, firmware and driver updates.

I wanted to buy a Framework tablet, because I love the idea of a company focusing on modularity and serviceability, but for the time being, they’re out of my price range. For less than the price of one new machine, I was able to refurbish 3 older, but high-end ThinkPads for different uses.

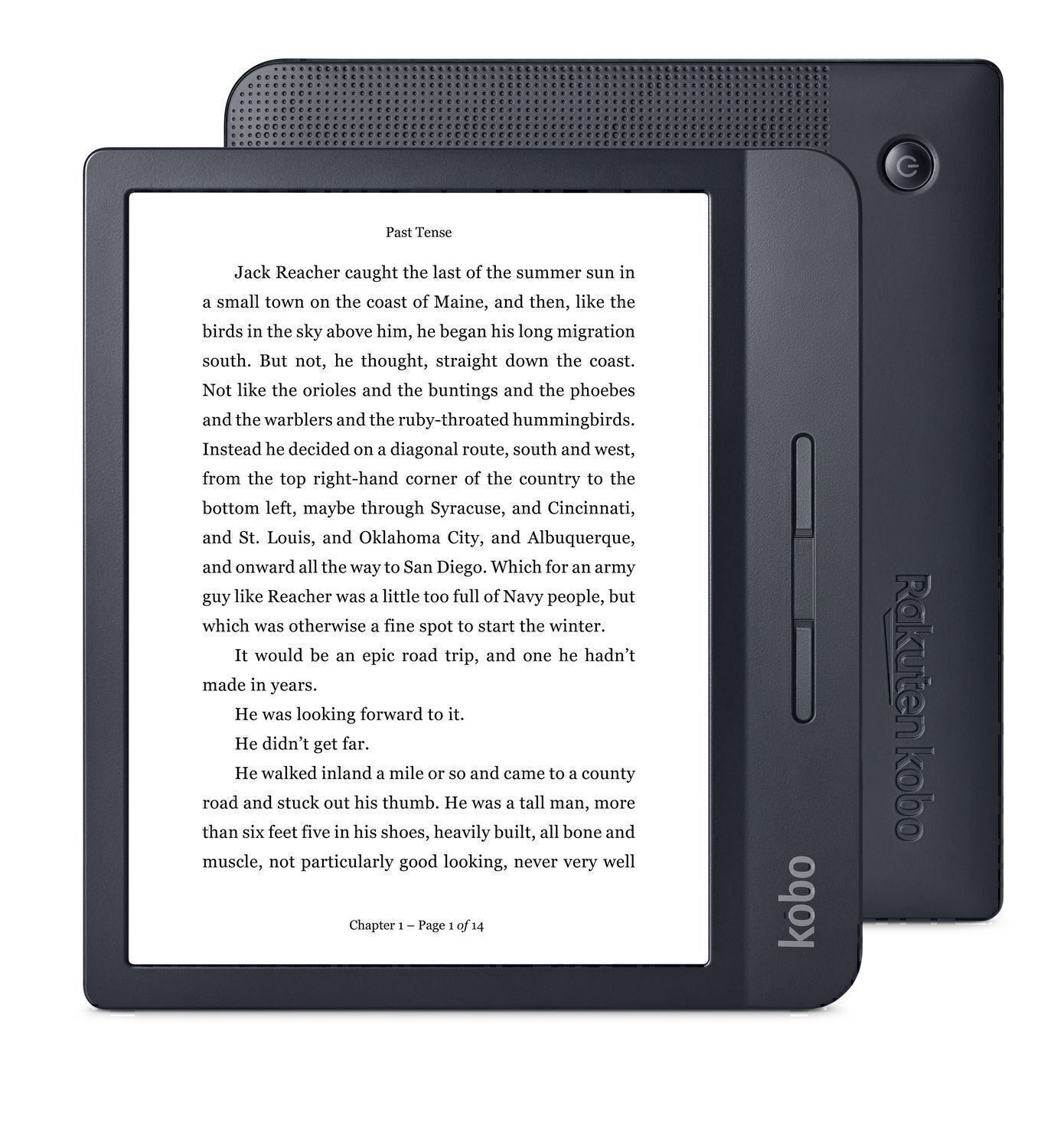

While we’re on the topic of eschewing popular hardware, Kobo is worthy of an honorable mention. Having worked on Kindle, and being a user for so long, I have to admit that I was largely unaware that alternatives existed. But as the battery of my beloved Oasis 1 (the two-part Kindle with removable battery cover, code-named Whisky-Soda) began to die, I decided to try an alternative that fed less into Amazon’s push toward world-domination.

Kobo is backed by a Canadian company. Their hardware is perhaps a little less premium than the incumbent, but a used H2O (water-proof) is entirely satisfactory physically, and its software is quite delightful. Open-format ePub books are natively supported, opening up a wide variety of alternate content providers, and its Public Library integration is excellent. From the device you can browse your library, take out, and even renew books.

The accompanying mobile app, so you can sync up and read from your phone, is maybe not as good as Amazon’s, but it does the job. And since I can read ePub natively, there are many other platforms I can read on as well.

Reading on Kobo isn’t really much more friction than reading on Kindle, but I understand if it’s not for everyone. Kindle is a great platform, as long as you can rationalize the behavior of its parent company. X1 ThinkPads, however, get my unqualified endorsement as a great portable computing platform for anyone. They’re perhaps a little bland compared to a flashier Mac, but they’re dignified, easily repaired, and built in a way that promises a long, reliable, and no-nonsense useful life. Next time you’re in the market for a computer, consider buying something you won’t have to throw away when its battery dies…

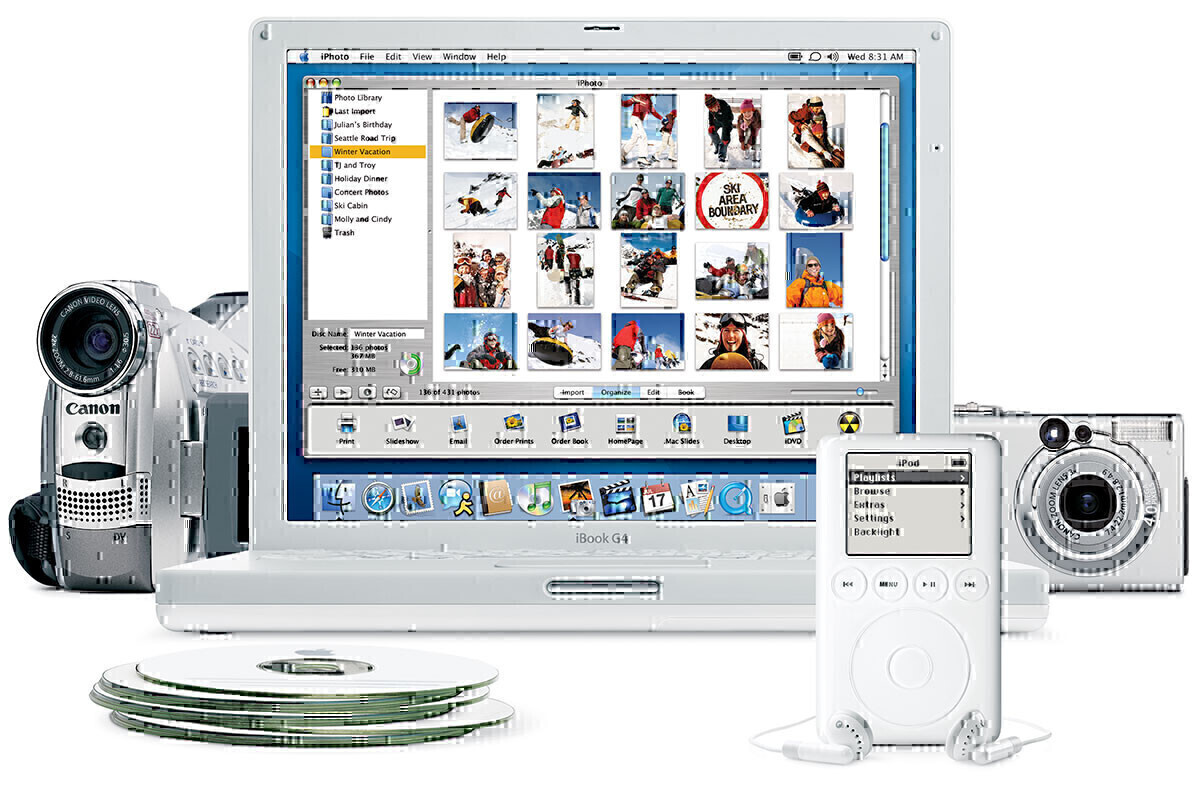

Since I first started out archiving photos, a number of technology solutions have come along claiming to be able to do it better. Flickr, Google Photos, iPhoto, Amazon Photos all made bold assertions that they could automatically organize photos for you, in exchange for a small fee for storing them. The automatic organization always sucked, and the fees were usually part of an ecosystem lock-in play. It seems nothing has been able to beat hierarchical directory trees yet.

Right now, our total synced storage needs for the family are under 300GB. I have another terabyte of historical software, a selection of which will remain in a free OneDrive account. The complete 1.3TB backup is on dual hard drives, one always

cheap, or terribly different looking, but it’s a good effort at making a repairable handset. The

You can do it now, although the output is significantly less meaningful or helpful on the modern web. Dig around your browser’s menus and it’s still there somewhere: the opportunity to examine the source code of this and other websites.

The swan song of the Apple II platform was called the IIGS (the letters stood for Graphics and Sound), which supported most of the original Apple II software line-up, but introduced a GUI based on the Macintosh OS — except in color! The GS was packed with typical Wozniak wizardry, relying less on brute computing power (since Jobs insisted its clock speed be limited to avoid competing with the Macintosh) and more on clever tricks to coax almost magical capabilities out of the hardware (just try using a GS without its companion monitor, and you’ll see how much finesse went into making the hardware shine!)